Challenges in Enterprise LLM Adoption

We're ecstatic to introduce pulze.ai, your gateway to the expansive world of Large Language Models (LLMs) for enterprise applications. With the wave of LLM adoption initiated by ChatGPT, pulze.ai steps in to address the challenges enterprises face in leveraging these behemoths.

Pulze’s Dynamic LLM Automation Platform allows engineering and product teams to quickly and easily build all leading LLMs into their products at once. A single API provides access to all best-in-class LLMs and efficiently routes each request to the model that offers the best combination of speed, quality, and cost. Built with the future in mind, Pulze automatically lets products support new and better models as they are released. Try Pulze.ai for free today to benefit from leading LLM automation functionality such as fine-tuning, prompt engineering, benchmarking, dynamic routing, logging and monitoring, and much more.

But let us start a bit earlier.

With the launch of ChatGPT, a broad audience became aware of, if not fascinated by, the power of Large Language Models (LLMs) and their promise of natural language interfaces to all kinds of applications. ChatGPT has truly enabled everyone to leverage and interact with cutting-edge machine learning using natural language, without the need for a massive team of data scientists and machine learning engineers. Ten months later, however, we still encounter considerable challenges in adopting LLMs for real applications:

- Choosing the Right Model(s): There is an ever-evolving zoo of large language models, including GPT-3.5, GPT-4, Claude, Llama 2, Falcon, and many more. Choosing between these models involves several trade-offs, for example:

  - Accuracy across different tasks and domains,

  - Cost,

  - Latency,

  - Data privacy.

These trade-offs make it almost impossible for non-experts to choose the right model for each task.

- Avoiding Hallucinations: LLMs are probabilistic models prone to hallucinations, which makes it hard to rely on their answers. Moreover, they cannot explain their predictions, which is a particular problem in sensitive domains such as finance or healthcare.

- Connecting to Custom Knowledge: LLMs are trained only on general data, which makes it challenging to use internal, potentially sensitive company information with them and to keep that information up to date.

- Task-Specific Accuracy: LLMs are general-purpose models and therefore often perform insufficiently on specialized tasks.

- Enterprise Manageability: Meeting enterprise requirements such as quotas, access management, and logging across different models and providers is hard.

Bottom line: Currently you need access to an expert team to leverage LLMs for non-trivial use cases.

Welcome to Pulze.ai!

It is Pulze’s mission to make the value of LLMs accessible to everyone without the hassle.

In short, Pulze offers a simple way to build business applications on top of LLMs, with:

- Access to the newest models without changing a line of code.

- Smart routing of prompts to the best model for the given task, powered by Pulze’s own continuously improving classification system.

- Safe, managed connections from LLMs to custom, up-to-date knowledge such as databases or other data sources.

- Automatic fine-tuning of models for specialized tasks.

- Support for custom models, both self-deployed and hosted.

- Centralized, enterprise-wide management of LLMs, including single accounting, quota, and access control across all LLM providers and models.
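Centralized quota and access control essentially means a single ledger that every request passes through, whichever provider ends up serving it. The Python sketch below illustrates that idea under simplifying assumptions: `QuotaLedger` is a hypothetical class, not part of Pulze's API; budgets are counted in tokens per team; and a team without a configured budget is treated as having no access at all.

```python
from collections import defaultdict


class QuotaLedger:
    """Hypothetical central ledger: one token budget per team, shared by all providers."""

    def __init__(self, limits: dict[str, int]) -> None:
        self.limits = dict(limits)           # team -> token budget for the period
        self.used = defaultdict(int)         # team -> tokens consumed so far
        self.by_provider = defaultdict(int)  # (team, provider) -> tokens, for one consolidated bill

    def charge(self, team: str, provider: str, tokens: int) -> bool:
        """Record usage if it fits the team's budget; return False if it would exceed it."""
        if team not in self.limits:
            # Access control: teams without a budget may not use any model.
            raise PermissionError(f"team {team!r} has no LLM access")
        if self.used[team] + tokens > self.limits[team]:
            return False
        self.used[team] += tokens
        self.by_provider[(team, provider)] += tokens
        return True


ledger = QuotaLedger({"support": 1000})
print(ledger.charge("support", "openai", 600))     # True
print(ledger.charge("support", "anthropic", 300))  # True: same budget, different provider
print(ledger.charge("support", "openai", 200))     # False: 900 + 200 exceeds 1000
```

Because the budget is keyed by team rather than by provider, switching a request to a different model does not let it escape the limit, while `by_provider` still keeps the per-provider breakdown a single invoice would need.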

Let us look at a simple example in the Pulze Playground (and yes, if you haven’t done so yet, head over there and start experimenting with LLMs without signing up or writing any code).

For anyone already familiar with ChatGPT, the basic setup will look familiar, but under the hood the system is far more intricate than it first appears. Instead of providing a single result from a single model, as ChatGPT does, Pulze evaluates outputs from multiple models. Each model's output also comes with a score calculated for your prompt.

The scoring function can be configured to suit your specific needs: is cost efficiency your top priority, or rather response latency or response quality?

Outside the Playground, Pulze queries only the best-scored model, taking your configured priorities into account, rather than sending parallel requests to every model.
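To make the idea of a configurable scoring function concrete, here is a minimal Python sketch. It is not Pulze's actual scoring or routing logic: the `Candidate` fields, the linear weighted score, and the assumption that quality, cost, and latency have already been estimated and normalized to [0, 1] for each prompt are all illustrative assumptions. `rank` mimics the Playground view of every model's score, and `route` returns only the single best-scored candidate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """Hypothetical per-prompt estimates for one model (not a Pulze data type)."""
    name: str
    quality: float  # assumed normalized to [0, 1]; higher is better
    cost: float     # assumed normalized to [0, 1]; lower is better
    latency: float  # assumed normalized to [0, 1]; lower is better


def score(c: Candidate, w_quality: float, w_cost: float, w_latency: float) -> float:
    """Illustrative linear score: reward quality, penalize cost and latency."""
    return w_quality * c.quality - w_cost * c.cost - w_latency * c.latency


def rank(candidates: list[Candidate], w_quality: float = 1.0,
         w_cost: float = 0.0, w_latency: float = 0.0) -> list[Candidate]:
    """Playground-style view: every candidate, best score first."""
    return sorted(candidates,
                  key=lambda c: score(c, w_quality, w_cost, w_latency),
                  reverse=True)


def route(candidates: list[Candidate], w_quality: float = 1.0,
          w_cost: float = 0.0, w_latency: float = 0.0) -> Candidate:
    """Production-style routing: only the top-ranked model would be queried."""
    return rank(candidates, w_quality, w_cost, w_latency)[0]


# Made-up numbers for two hypothetical models, purely for illustration.
candidates = [
    Candidate("model-a", quality=0.90, cost=0.80, latency=0.60),
    Candidate("model-b", quality=0.75, cost=0.20, latency=0.30),
]
print(route(candidates).name)              # quality only -> model-a
print(route(candidates, w_cost=1.0).name)  # quality vs. cost -> model-b
```

With quality as the only criterion, the stronger but pricier model wins; once cost is weighted in, the cheaper model scores higher (0.55 versus roughly 0.10), which is the kind of preference shift the scoring configuration is meant to express.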

Why not just use ChatGPT?

We hope we have convinced you by now that Pulze at least looks cool, but the question remains: isn’t just using ChatGPT sufficient?

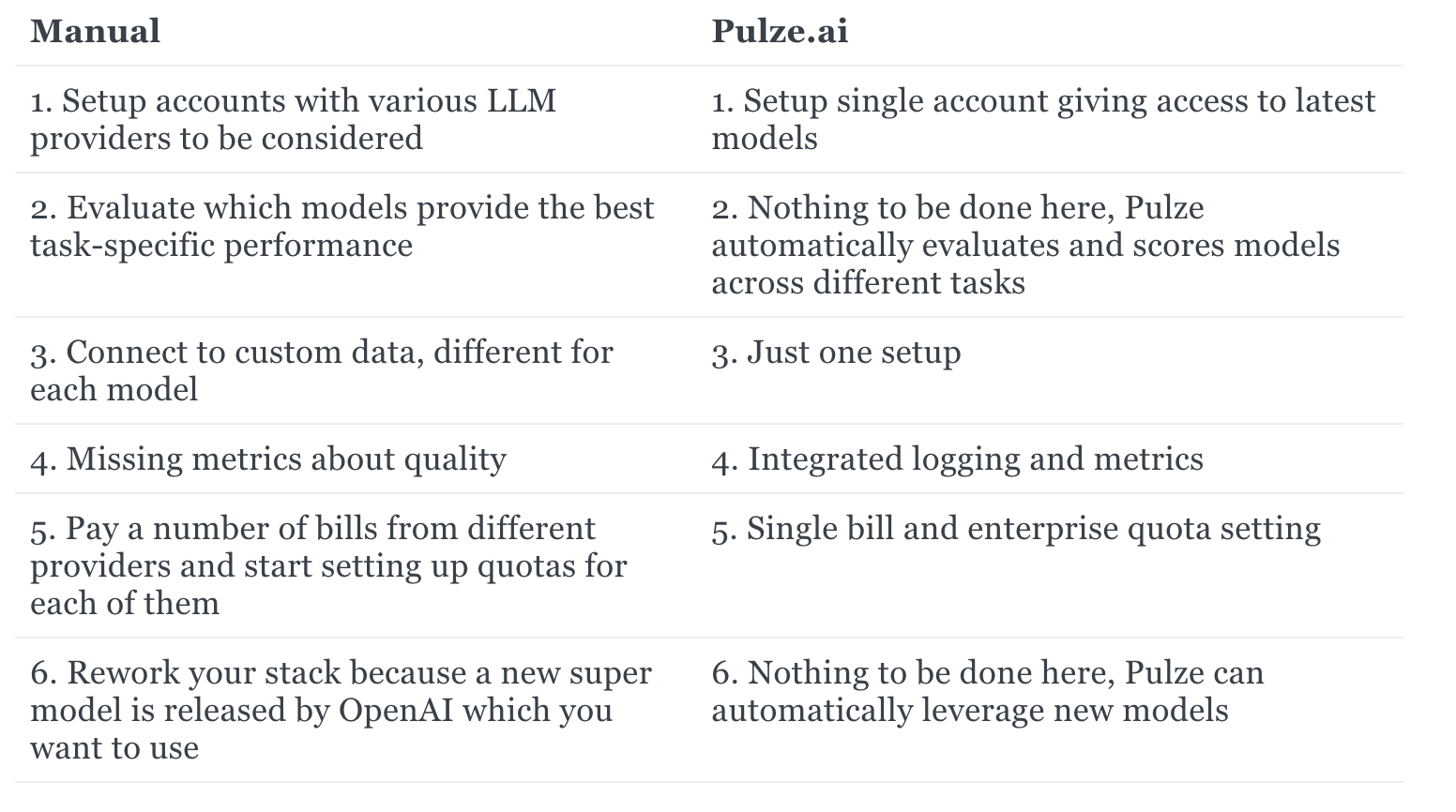

To answer that, let us take a concrete example and build an app: a company-specific chatbot that answers questions using internal company information, e.g., who is responsible for a specific task, or that summarizes task-specific information. Below, we compare the steps needed to build this app without and with Pulze.

Without Pulze.ai:

- Set up accounts with various LLM providers: If you want access to different language models, you'd need to create and manage a separate account with each LLM provider.

- Evaluate task-specific performance across models: You'd have to manually test each model to find out which performs best for a specific task, a time-consuming process.

- Model-specific custom data connections: Connecting to custom datasets is often a unique process for each model, requiring more integration effort.

- Lack of consistent quality metrics: There might not be a unified way to measure the quality of responses, leading to possible inconsistency.

- Manage multiple bills and quotas: Keeping track of payments, usage limits, and overages can become complex with multiple providers.

- Constantly update tech stack for new models: Whenever a new and potentially better model gets released, you'd need to change your system's setup to accommodate it.

With Pulze.ai:

- Single account setup for all models: You create just one account on Pulze.ai, and it gives you access to all the latest models. No need to manage multiple accounts.

- Automated model evaluation: Pulze does the hard work for you. It evaluates and scores models based on their performance across different tasks, ensuring optimal model selection.

- Unified custom data connection: No matter which LLM you're using, you only have to set up your custom data connections once with Pulze.

- Integrated metrics system: Pulze provides built-in logging and metrics, giving you a holistic view of model performance and usage.

- Consolidated billing and quota management: Say goodbye to multiple invoices and conflicting quota systems. With Pulze, you get a single bill and can set enterprise-wide usage limits easily.

- Seamless integration of new models: When a new model comes out, there's no need to change anything. Pulze ensures that the best models are always available for you, without any extra effort.
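One common way to get the "no changes when a new model comes out" property is to hide providers behind a single registry, so application code only ever calls one entry point. The Python sketch below illustrates that general pattern; it is an assumption about how such a layer could be structured, not a description of Pulze's internals, and `ModelRegistry`, the lambda adapters, and the `choose` routing callback are hypothetical stand-ins for real provider clients and routing logic.

```python
from typing import Callable

# Hypothetical adapter type: wraps one provider's client and turns a prompt into text.
Adapter = Callable[[str], str]


class ModelRegistry:
    """Hypothetical single entry point; application code never names a provider."""

    def __init__(self) -> None:
        self._adapters: dict[str, Adapter] = {}

    def register(self, model: str, adapter: Adapter) -> None:
        """Make a new model available to every caller at once."""
        self._adapters[model] = adapter

    def models(self) -> list[str]:
        return sorted(self._adapters)

    def complete(self, prompt: str, choose: Callable[[list[str]], str]) -> str:
        """Let a routing policy pick among registered models, then call that model."""
        model = choose(self.models())
        return self._adapters[model](prompt)


def pick_last(names: list[str]) -> str:
    # Toy routing policy for this example: the alphabetically last model name.
    return names[-1]


registry = ModelRegistry()
registry.register("model-a", lambda prompt: f"[model-a] {prompt}")
print(registry.complete("Who owns task 42?", pick_last))  # served by model-a

# Later, a new model is registered; the calling line itself is unchanged.
registry.register("model-b", lambda prompt: f"[model-b] {prompt}")
print(registry.complete("Who owns task 42?", pick_last))  # now served by model-b
```

The application's call to `complete` is identical before and after the new model appears; only the registry and the routing policy know which models exist.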

How to get started?

The easiest way to get started is the Pulze Playground, which requires no signup. It makes it very simple to see how different models perform on different tasks. From providing results across different models to customizable scoring functions, the Playground is where theory meets practicality.

Supported models currently include the latest models from OpenAI, as well as Llama, Falcon, and more. Check out our API documentation for an in-depth look.

For next steps, such as connecting to custom data sources, see the Pulze documentation, especially its tutorial section.

Pulze’s commitment doesn't stop at LLM integration. We’re constantly enhancing our platform, and our roadmap includes features such as more seamless integrations, data source connections, and specialized LLM training tailored to enterprise tasks.

What's on the Horizon for Pulze.ai?

Our vision is expansive:

- Custom Pulze LLMs: We're exploring custom models tailored for specific enterprise tasks.

- Enhanced Integration Options: Aimed at an even smoother transition for businesses into the LLM world.

- Special Features: Advanced quota management, data privacy controls, and much more.

Got a suggestion or a use case for pulze.ai? We’re all ears! Reach out at hello@pulze.ai.

Join the Pulze Family! We're growing, and if you're passionate about LLMs, AI, and enterprise solutions, we'd love to have you onboard. Follow us on Twitter, LinkedIn, and join our community for discussions and updates.

Summary

Large Language Models represent a powerful shift that makes machine learning accessible to a broad audience. Still, building enterprise applications on top of them remains difficult because of the challenges discussed above.

Pulze.ai aims to remove the complexities and make building LLM-based applications with enterprise requirements easy and affordable for everyone.

What Pulze.ai Brings to the Table:

- Simplicity at its Best: Use state-of-the-art LLMs seamlessly, backed by an intuitive REST API, ensuring rapid integrations.

- Optimized Prompt Routing: Our proprietary system ensures your prompts are directed to the most suitable model, maximizing accuracy and efficiency.

- Robust Infrastructure: Trust in pulze.ai's reliability, built on our experience handling production-grade traffic across diverse domains.

- Always Up-to-Date: As the LLM ecosystem grows, so do we. Be it GPT-4 or Llama 2, rest assured you're always working with the latest.

The Pulze.ai Advantage:

- Easy Setup: Say goodbye to the complexities of setting up individual LLM providers. Pulze streamlines the process for you.

- Lightning-Fast Responses: Pulze's infrastructure is designed for agility, ensuring low latency and high-throughput interactions.

- Built for Enterprises: From centralized management to quota controls, pulze.ai is tailored for enterprise requirements.

This marks the beginning of our Pulze blog series. Stay tuned for insights, tutorials, and deep-dives into the world of LLMs and enterprise integrations.