2023: A Year in Review – Pulze, the Evolution and Impact of Generative AI, and Beyond.

In the ever-evolving landscape of artificial intelligence, the year 2023 has proven to be another transformative period for generative AI. As we reflect on the past months, this blog aims to provide a comprehensive recap of the key developments in the generative AI space, covering breakthroughs, industry trends, ethical considerations, and a glimpse into what has happened at Pulze.

In 2023, three major developments stood out in the field of generative artificial intelligence: the rise of Mixture-of-Experts (MoE) models, advances in multi-modal models, and the expansion of context windows in language models.

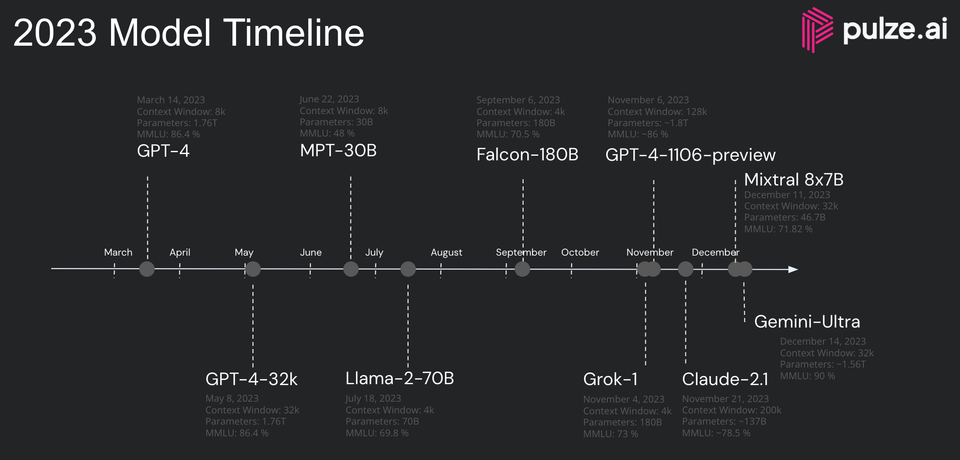

The year kicked off with Microsoft's multibillion-dollar investment in OpenAI, setting the stage for the launch of GPT-4. Contrary to initial assumptions, GPT-4 is reportedly not a single dense model. According to widely circulated but unconfirmed reports, it is a composite of multiple models of roughly 220 billion parameters each, reminiscent of the shift from monolithic applications to microservices in software development. OpenAI has not disclosed GPT-4's architecture, but if these reports are accurate, its structure is akin to an MoE model, in which various specialized components work in tandem.

Another breakthrough came from Mistral AI, which unveiled its "Mixtral of Experts" model, Mixtral 8x7B. At Pulze, our vision aligns with this trend: we foresee a future where countless expert models collaborate. Our Pulze model exemplifies this approach by combining several large language models (LLMs) within a Mixture-of-Experts (MoE) architecture. We see it as a pioneering example in the MoE domain, leading the way for future developments.

The year also saw a significant increase in the context window sizes of these models, which lets them take in far more retrieved material for retrieval-augmented generation (RAG). OpenAI took the lead with gpt-4-32k, which offers a 32,000-token context window, up from the 8,000 tokens of the base gpt-4 model. The expansion reached a new peak with gpt-4-1106-preview (GPT-4 Turbo), featuring a 128,000-token window. Anthropic's claude-2.1 model pushed the envelope further with a 200,000-token context window.

Additionally, 2023 has been a landmark year for open-source AI, highlighted by the release of Llama-2-70B and various other open-source models, further democratizing access to advanced AI technology. This blend of proprietary and open-source innovations exemplifies the dynamic and collaborative nature of AI development in the current era.

Milestones and Achievements

2023 marked a significant stride in generative models, with the emergence of cutting-edge models such as:

GPT-4

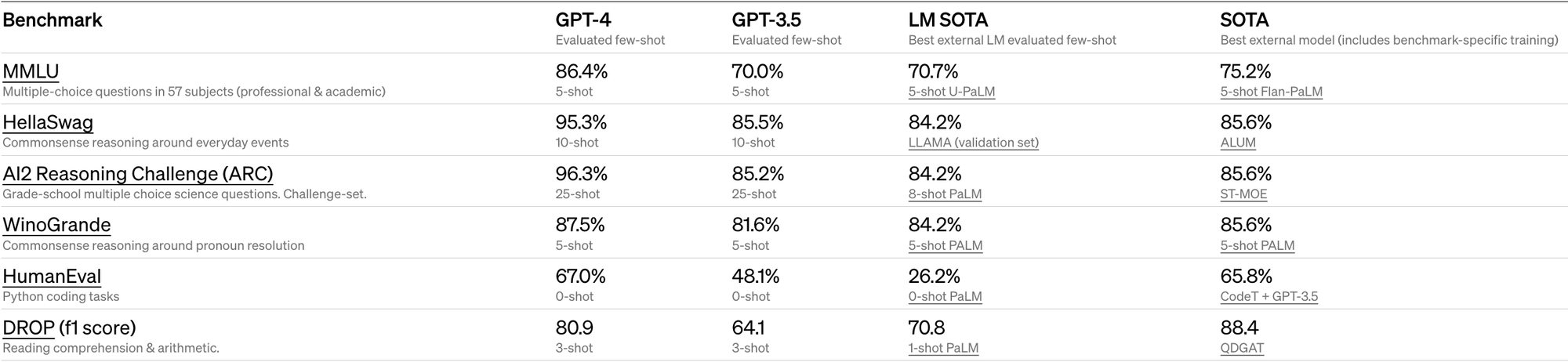

ChatGPT, a product of OpenAI, has gained widespread acclaim since its debut in November 2022. The models behind it build on a line of predecessors: GPT-1, GPT-2, GPT-3, and GPT-3.5. GPT-4, launched in March 2023, stands out for its ability to handle over 25,000 words of text, understand images, and exhibit stronger reasoning skills. It has the potential to revolutionize education, and it excels in subjects like Mathematics, Science, History, Literature, and Business.

GPT-4 accepts both visual and textual inputs, although OpenAI has not disclosed the architecture it uses to combine them. It can analyze images and schematics and generate contextually relevant text about them. Its capabilities include completing sentences, describing images, explaining jokes, writing code in multiple programming languages, and generating long-form texts such as emails and legal documents. It set the industry standard upon its launch, outperforming its predecessor, GPT-3.5.

Gemini

Google DeepMind introduced Gemini in December 2023, an advanced multimodal model designed to seamlessly handle various types of information, including text, code, images, audio, and video. According to Google, Gemini Ultra exceeds previous state-of-the-art results on 30 of 32 widely used academic benchmarks and is the first model to outperform human experts on MMLU (massive multitask language understanding). With a focus on responsible development, Gemini has undergone thorough safety evaluations addressing bias and toxicity. Developers can already experiment with Gemini Pro via the Gemini API, and it will soon be available on our platform as a more efficient option.

Mixtral 8x7B

Mistral recently launched Mixtral 8x7B, a high-quality sparse mixture-of-experts (SMoE) model. Mistral reports that it outperforms Llama 2 70B and matches or outperforms GPT-3.5 on most standard benchmarks. But what is an MoE? MoE models contain sparse layers with a fixed number of "experts," each of which is a neural network, plus a gate (router) network that determines which tokens are sent to which experts. Because only a few experts run for each token, MoEs can be pre-trained with far less compute than a dense model of the same size, so model or dataset size can be scaled up within the same compute budget. Mixtral has 8 experts in each layer, and its router sends every token to 2 of them. As a result, only about 12.9 billion of its roughly 46.7 billion total parameters are used per token, which yields faster pre-training and inference than a dense model of comparable size.
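To make the gating idea concrete, here is a minimal, pure-Python sketch of a single sparse MoE layer with top-2 routing over 8 experts, mirroring Mixtral's configuration. The dimensions, random weights, and the use of plain linear maps as stand-in "experts" are illustrative assumptions; real experts are full feed-forward networks inside a transformer, and this is not Mistral's implementation.

```python
import math
import random
from typing import List

Vector = List[float]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w: List[Vector], x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


class SparseMoELayer:
    """Toy sparse MoE layer: a linear gate scores every expert, but only the top-k run."""

    def __init__(self, dim: int, num_experts: int = 8, top_k: int = 2, seed: int = 0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Gate: one weight row per expert, producing one routing logit per expert.
        self.gate = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
        # Each expert is a random linear map standing in for a feed-forward network.
        self.experts = [
            [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(dim)]
            for _ in range(num_experts)
        ]
        self.calls = [0] * num_experts  # how many times each expert was executed

    def forward(self, token: Vector) -> Vector:
        logits = matvec(self.gate, token)
        # Keep only the k highest-scoring experts for this token.
        top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[: self.top_k]
        # Softmax over the selected logits only, so the k weights sum to 1.
        weights = softmax([logits[i] for i in top])
        out = [0.0] * len(token)
        for weight, i in zip(weights, top):
            self.calls[i] += 1
            expert_out = matvec(self.experts[i], token)
            out = [o + weight * y for o, y in zip(out, expert_out)]
        return out


if __name__ == "__main__":
    layer = SparseMoELayer(dim=4)
    rng = random.Random(42)
    tokens = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(5)]
    outputs = [layer.forward(t) for t in tokens]
    print(sum(layer.calls))  # 10: each of the 5 tokens ran exactly 2 of the 8 experts
```

The design point the sketch illustrates is that, apart from the small gate, the per-token cost of `forward` grows with `top_k` rather than with `num_experts`: adding experts adds capacity without adding much per-token compute.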

Mixtral routes each token to a small subset of its 8 experts to achieve high performance, which we see as validating the Pulze approach. From a non-technical standpoint, you can think of Pulze as an MoE over more than 30 LLMs, allowing us to outperform any single model.

Multi-Modal Models

There has been a lot of buzz around large multimodal models (LMMs), so let's first clarify what multi-modal models are. Multimodal can mean one or more of the following (Huyen, 2023):

- Input and output are of different modalities (e.g. text-to-image, image-to-text)

- Inputs are multimodal (e.g. a system that can process both text and images)

- Outputs are multimodal (e.g. a system that can generate both text and images)

Examples of LMM providers include Pika, ElevenLabs, and Twelve Labs. The GPT-4 and Gemini models mentioned above have multi-modal capabilities as well.

Pika is an idea-to-video platform that brings your creativity to motion. Its launch of Pika 1.0 created a lot of buzz on X, where developers see it revolutionizing the way content is generated and shared. ElevenLabs is a voice technology research company specializing in the development of advanced AI speech software. Twelve Labs is a San Francisco-based startup specializing in training AI models for multimodal video understanding. The company focuses on solving complex video-language alignment problems, allowing developers to create applications that can interpret and analyze both the textual and visual components of videos.

Pulze sponsored a hackathon for multi-modal models. While it is hard to evaluate which multi-modal model is best beyond judging output quality, Pulze will start offering its first multi-modal capabilities on our platform next year.

Industry Trends

The year 2023 saw widespread adoption of generative AI across various industries. Companies recognized the potential of these models to revolutionize their operations, leading to increased investment and integration. Collaborations and partnerships between industry players became more common as organizations sought to combine their expertise for mutual benefit. Cross-disciplinary collaborations between technology and other sectors showcased the far-reaching impact of generative AI and brought AI trust, risk and security management (AI TRiSM) to the forefront (Gartner, 2024).

However, amid the surge in generative AI adoption, a notable consequence emerged: the risk of model lock-in. Companies, in their eagerness to embrace these cutting-edge technologies, found themselves dependent on a limited number of language model providers. This concentration poses challenges, as organizations become tightly integrated with specific platforms, which hinders flexibility and adaptability. The need for a balanced approach to technology adoption and ecosystem diversification has become a key focal point for industry leaders as they chart the course for the continued evolution of generative AI.

Challenges and Ethical Considerations

Amidst the excitement, the generative AI community grappled with challenges related to bias in models and the ethical use of AI. The industry responded with increased efforts to address these concerns, emphasizing the importance of transparency and fairness in AI development. Regulatory developments played a crucial role in shaping the ethical landscape, as governments and organizations sought to establish guidelines for responsible AI deployment.

Two of the major regulatory developments are the EU AI Act and the US Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence.

The European Union has reached a political agreement on the Artificial Intelligence (AI) Act. Key highlights include the prohibition of certain AI applications deemed threatening to citizens' rights and democracy, with a specific focus on biometric categorization, facial recognition, emotion recognition, social scoring, and AI manipulation. The Act outlines exemptions for law enforcement use of biometric identification systems, subject to judicial authorization and defined lists of crimes. High-risk AI systems face obligations, including mandatory fundamental rights impact assessments. General-purpose AI systems must meet transparency requirements, with more stringent obligations for high-impact models. Measures to support innovation and SMEs include regulatory sandboxes. Non-compliance may result in fines of up to 7% of global turnover. The agreed text still awaits formal adoption by both Parliament and Council before it becomes EU law.

President Biden's Executive Order aims to position the U.S. as an AI leader, including setting new standards for AI safety, urging Congress to pass privacy legislation, addressing equity concerns, supporting innovation, and ensuring responsible government use of AI. The order emphasizes collaboration with allies for a strong international AI governance framework. These actions align with the Biden-Harris Administration's commitment to responsible innovation, fostering a safe, secure, and trustworthy AI landscape in the U.S. and globally.

What Has Happened at Pulze

Reflecting on the past year, our journey has been marked by significant milestones and innovative features that have transformed our platform. In Q2, we made our first launch, which has shaped the course of our progress since.

S.M.A.R.T - The LLM Routing Tool: We introduced S.M.A.R.T (Semantic Model Allocation Routing Tool), built on top of our Knowledge Graph. This tool represents a revolutionary step in intelligent prompt routing, acting as a matchmaker between prompts and LLMs. Because this architecture separates expert routing from the models themselves, it positions us as pioneers in the Mixture-of-Experts domain: it leads to greater cost savings and a smaller resource footprint, and it gives us the agility to incorporate new models as soon as they are released. Much like Google, the system navigates a vast repository of information. However, instead of indexing web pages, it evaluates and leverages different LLMs to deliver the most relevant and accurate responses. Mirroring Google's renowned PageRank algorithm, it ranks the available LLMs for each query and routes the query to the most appropriate one. This ensures that the response is not only informative but also precise, harnessing the diverse capabilities of various LLMs in much the same way that Google optimizes and presents search results from the internet's vast landscape. In many ways, you can say we are the "Google for LLMs".
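As a rough illustration of prompt-level routing, the sketch below ranks candidate models for a prompt by an estimated quality score minus a price penalty. It is hypothetical throughout: the keyword classifier stands in for a semantic model, and the model names, prices, quality scores, and the `cost_weight` parameter are made up for illustration. It does not reproduce S.M.A.R.T or its Knowledge Graph.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Candidate:
    name: str                   # hypothetical model identifier
    price_per_1k_tokens: float  # hypothetical price in USD
    quality: Dict[str, float]   # hypothetical quality score in [0, 1] per prompt category


# Toy keyword classifier standing in for a real semantic classifier.
CATEGORY_KEYWORDS = {
    "code": ("python", "function", "bug", "compile"),
    "math": ("integral", "equation", "prove", "solve"),
}


def classify(prompt: str) -> str:
    """Assign the prompt to the first category whose keywords it mentions."""
    text = prompt.lower()
    for category, keywords in CATEGORY_KEYWORDS.items():
        if any(k in text for k in keywords):
            return category
    return "general"


def rank(prompt: str, candidates: List[Candidate], cost_weight: float = 1.0) -> List[str]:
    """Return model names ordered from most to least suitable for this prompt."""
    category = classify(prompt)

    def score(c: Candidate) -> float:
        # Reward expected quality for this category; penalize price.
        return c.quality.get(category, 0.0) - cost_weight * c.price_per_1k_tokens

    return [c.name for c in sorted(candidates, key=score, reverse=True)]


if __name__ == "__main__":
    models = [
        Candidate("model-a", 0.03, {"code": 0.90, "math": 0.85, "general": 0.90}),
        Candidate("model-b", 0.002, {"code": 0.80, "math": 0.60, "general": 0.85}),
    ]
    prompt = "Fix the bug in this Python function"
    print(rank(prompt, models))                   # ['model-a', 'model-b']
    print(rank(prompt, models, cost_weight=5.0))  # ['model-b', 'model-a']
```

Raising `cost_weight` shifts traffic toward the cheaper model, a miniature version of the quality-versus-cost trade-off that a production router has to balance.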

Expanded Model Catalog: Our platform now includes advanced models like Claude-2.1 and Mixtral-8x7B-v0.1, significantly enriching our pool of expert models. This expansion directly enhances the quality and accuracy of our S.M.A.R.T routing decisions, fostering a more democratic AI environment. Additionally, by incorporating a wider range of providers and models, including different versions of the same model, our S.M.A.R.T routing system gains the ability to identify the most cost-effective option available. This approach not only ensures cost efficiency but also enhances the platform's reliability and uptime. When a provider goes down, our system seamlessly switches to alternative models, maintaining an impressive 100% uptime for many open-source models. This strategic expansion and integration reinforce our commitment to providing a robust, efficient, and reliable AI service to our users.
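The failover behavior can be sketched as an ordered list of interchangeable providers that are tried one after another. The provider names, the `ProviderUnavailable` exception, and the callables below are hypothetical placeholders, not Pulze's API or any provider's SDK.

```python
from typing import Callable, List, Tuple


class ProviderUnavailable(Exception):
    """Raised by a (hypothetical) provider client when it cannot serve a request."""


def complete_with_fallback(
    prompt: str, providers: List[Tuple[str, Callable[[str], str]]]
) -> Tuple[str, str]:
    """Try each provider in order; return (provider_name, completion) from the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderUnavailable as exc:
            # Record the outage and fall through to the next equivalent model.
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


if __name__ == "__main__":
    def down(prompt: str) -> str:
        raise ProviderUnavailable("503 Service Unavailable")

    def healthy(prompt: str) -> str:
        return f"echo: {prompt}"

    print(complete_with_fallback("Hello", [("provider-a", down), ("provider-b", healthy)]))
    # ('provider-b', 'echo: Hello')
```

Only the outage exception triggers a fallback in this sketch; other errors propagate, so a malformed request is not silently retried against every provider.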

Grafana Partnership and LLM Observability Dashboard: We've partnered with Grafana and contributed to the development of the Pulze LLM Application Overview Dashboard. This tool offers an out-of-the-box solution for monitoring LLM applications. It provides a holistic view of model requests, costs broken down by custom labels, and troubleshooting capabilities—all within a single, comprehensive dashboard.

Unified Model Responses and Provider Price Incorporation: Our efforts have been focused on unifying model responses and seamlessly integrating price changes from various providers. This innovation significantly reduces time spent on model integration and switching between providers. Our users can now quickly incorporate new models through our fully qualified model path, maintaining a consistent response format, thereby addressing model lock-in challenges and saving valuable time.
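One way to picture unified responses and fully qualified model paths is a small adapter layer: parse a `provider/model` path, then use a per-provider extractor to map each raw response onto one shared shape. The path format, provider names, and raw response shapes here are hypothetical simplifications, not Pulze's actual format or any provider's exact payload.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Tuple


@dataclass
class UnifiedResponse:
    provider: str
    model: str
    text: str


def split_model_path(path: str) -> Tuple[str, str]:
    """Split a hypothetical 'provider/model' path into its two parts."""
    provider, sep, model = path.partition("/")
    if not sep or not provider or not model:
        raise ValueError(f"expected 'provider/model', got {path!r}")
    return provider, model


# Hypothetical extractors: each knows where one provider puts the generated text.
EXTRACTORS: Dict[str, Callable[[Dict[str, Any]], str]] = {
    "provider-a": lambda raw: raw["choices"][0]["message"]["content"],
    "provider-b": lambda raw: raw["completion"],
}


def normalize(path: str, raw: Dict[str, Any]) -> UnifiedResponse:
    """Map a provider-specific payload onto one shared response shape."""
    provider, model = split_model_path(path)
    return UnifiedResponse(provider=provider, model=model, text=EXTRACTORS[provider](raw))


if __name__ == "__main__":
    a = normalize("provider-a/model-x", {"choices": [{"message": {"content": "Hi"}}]})
    b = normalize("provider-b/model-y", {"completion": "Hi"})
    print(a.text == b.text)  # True: callers read .text regardless of provider
```

Switching providers then amounts to changing the path string, while downstream code that reads `.text` stays the same, which is how a consistent response format helps with model lock-in.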

Prompt Engineering: Our dedication to empowering users reached new heights with the introduction of prompt templating. This feature allows users to tailor their interactions by customizing prompts to meet specific needs and extract optimal results.
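Prompt templating can be sketched with Python's standard `string.Template`, which fills `$`-placeholders and raises `KeyError` if one is left unfilled. The template text and variable names are hypothetical, and Pulze's own template syntax may differ.

```python
from string import Template

# Hypothetical template; each $-placeholder is filled in per request.
SUMMARY_TEMPLATE = Template(
    "You are a helpful assistant for $audience.\n"
    "Summarize the following text in $sentences sentences:\n\n"
    "$text"
)


def render(template: Template, **variables: str) -> str:
    """Fill every placeholder; substitute() raises KeyError if one is missing."""
    return template.substitute(**variables)


if __name__ == "__main__":
    prompt = render(
        SUMMARY_TEMPLATE,
        audience="executives",
        sentences="3",
        text="Revenue grew 12% while costs stayed flat.",
    )
    print(prompt)
```

Failing loudly on a missing variable is a deliberate choice here; `Template.safe_substitute()` would instead leave unfilled placeholders in the prompt that reaches the model.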

Team: Behind these accomplishments stands our dedicated team, whose collective efforts and expertise have been instrumental in driving our platform's success. Their commitment to innovation and excellence has fueled our journey throughout the year and we've been growing a diverse team from all over the world.

Conferences: Our engagement in key conferences throughout the year has not only showcased our advancements but also provided valuable opportunities for learning and collaboration within the broader AI community. These conferences have been integral to our growth and to the exchange of ideas that shape the future of our platform. Highlights include ObservabilityCON in London by Grafana Labs and MLOps World in Austin.

Future Outlook

As we turn our gaze to 2024, the generative AI landscape appears poised for further evolution. Anticipated developments include enhanced model capabilities, broader industry adoption, and a continued focus on addressing ethical considerations. Emerging trends, such as the convergence of generative models with other AI technologies, promise to shape the trajectory of the field in the coming years. Challenges are expected too, requiring a collective effort from the community to navigate the complexities of this dynamic field. Stay tuned for our next blog post, where we will cover in more detail what we expect to happen in 2024.

Conclusion

The year 2023 has been a remarkable chapter in the story of Pulze and generative AI. From breakthroughs in models to widespread industry adoption, the impact of generative AI has been felt across diverse domains. As we reflect on the past year, it becomes evident that the journey of generative AI is far from over. With a commitment to responsible development and an eye towards the future, the generative AI community is poised to unlock new possibilities and shape the next chapter in this exciting technological narrative.