Looking ahead: Pulze's forecast for 2024

In our last blog post, Fabian Baier, our Co-Founder and CEO, described 2023 as transformative for generative AI. Here, we summarize his thoughts and look ahead to what 2024 has in store for GenAI and Pulze.

GenAI and Pulze in 2023

OpenAI launched GPT-4 in March, building on its GPT series with new capabilities such as generating longer texts, understanding images, and improved reasoning. In December, Google DeepMind unveiled Gemini, reporting state-of-the-art results on several benchmarks and emphasizing responsible development. Mistral also introduced Mixtral 8x7B, a high-quality sparse mixture-of-experts model that Mistral reports as matching or outperforming Llama 2 and GPT-3.5 on most benchmarks. Multi-modality for language models gained considerable traction, with examples from GPT-4, Gemini, and other providers. At the same time, society grappled with the ethical use of generative AI systems. Regulatory developments, including the EU AI Act and the U.S. Executive Order on AI, seek to address transparency, fairness, and responsible deployment.

Pulze launched S.M.A.R.T. (Semantic Model Allocation Routing Tool), which uses a knowledge graph to route each prompt to the most relevant model. This lowers costs, helps ensure high-quality output, and makes it easy to incorporate new models. We expanded our platform with advanced models like Claude-2.1 and Mixtral-8X7B-v0.1, improving S.M.A.R.T. routing decisions and creating a more democratic AI environment. The platform is built for cost-efficiency, reliability, and 100% uptime. We also partnered with Grafana on LLM observability and contributed the Pulze LLM Application Overview Dashboard.
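
To make the idea of prompt routing more concrete, here is a deliberately simplified sketch. It is not how S.M.A.R.T. works internally: the knowledge graph is replaced by a plain keyword lookup, and the model names, tags, prices, and the `cost_weight` parameter are all hypothetical values chosen for illustration. It only shows the general shape of the trade-off between relevance and cost.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str                   # hypothetical model identifier
    strengths: frozenset[str]   # hypothetical task tags the model is good at
    cost_per_1k_tokens: float   # hypothetical price, for illustration only


# Hypothetical catalog; a real router would load this from the provider list.
CATALOG = [
    Model("model-a", frozenset({"code", "reasoning"}), 0.03),
    Model("model-b", frozenset({"summarization", "chat"}), 0.002),
    Model("model-c", frozenset({"code", "chat"}), 0.008),
]

# Stand-in for semantic analysis: map task tags to trigger words.
KEYWORDS = {
    "code": {"function", "bug", "python", "compile"},
    "summarization": {"summarize", "shorten"},
    "reasoning": {"prove", "why", "explain"},
    "chat": {"hello", "hi", "thanks"},
}


def tag_prompt(prompt: str) -> set[str]:
    """Return the task tags whose trigger words appear in the prompt."""
    words = set(prompt.lower().split())
    return {tag for tag, triggers in KEYWORDS.items() if words & triggers}


def route(prompt: str, catalog: list[Model] = CATALOG, cost_weight: float = 10.0) -> Model:
    """Pick the model with the best relevance-minus-cost score."""
    tags = tag_prompt(prompt)

    def score(model: Model) -> float:
        relevance = len(tags & model.strengths)
        return relevance - cost_weight * model.cost_per_1k_tokens

    return max(catalog, key=score)


if __name__ == "__main__":
    print(route("Please summarize this article").name)
    print(route("fix the bug in this python function and explain why").name)
```

In this toy version, a cheap model wins whenever it is relevant enough, and a more expensive model is chosen only when it matches more of the detected task tags. Raising `cost_weight` shifts the balance further toward cheaper models.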

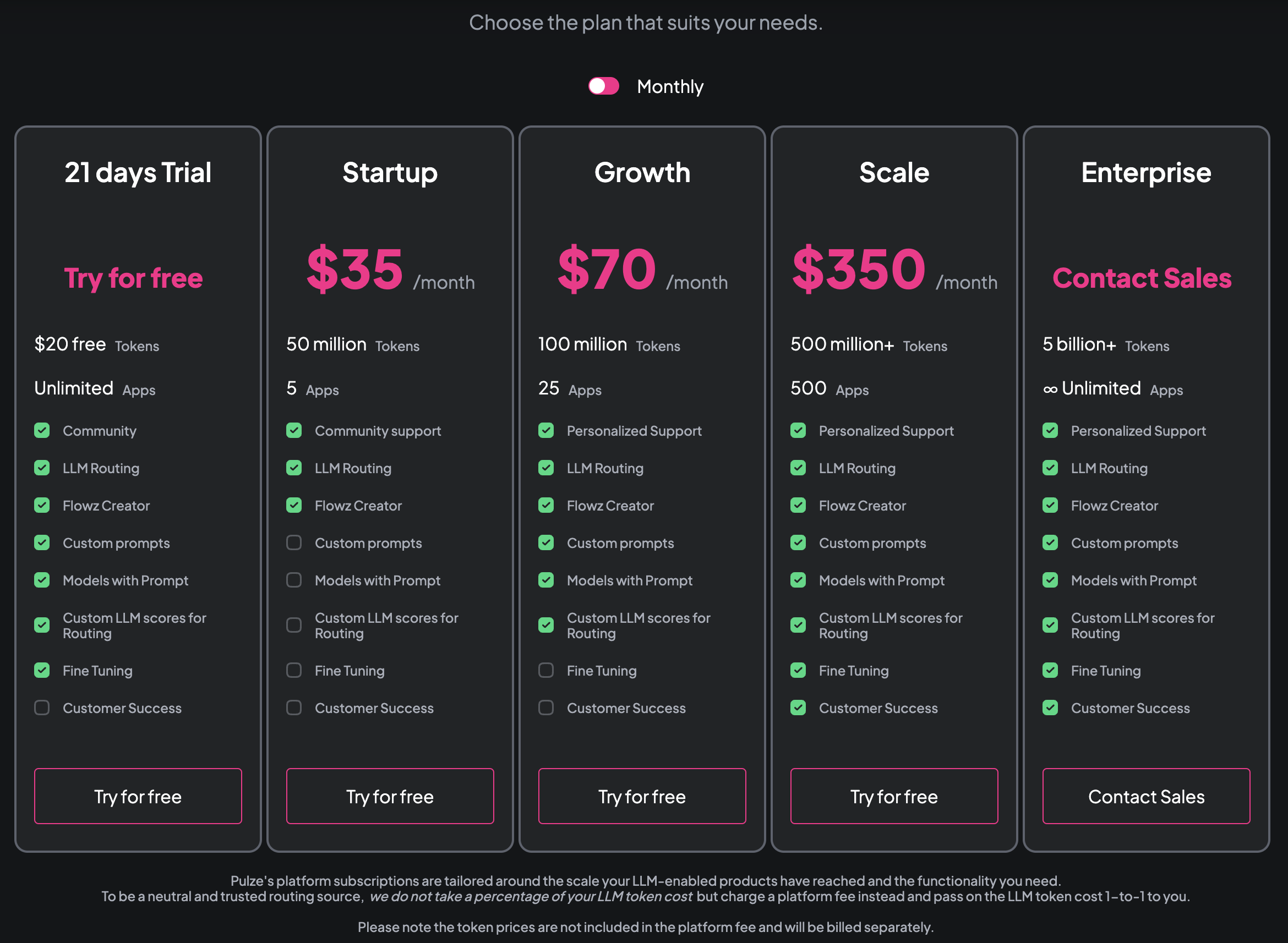

Pulze also focused on unifying model responses and integrating price changes from various providers. This reduces the time spent on model integration, addresses model lock-in, and lets users incorporate new models seamlessly. We then introduced prompt templating, which lets users customize interactions and prompts to meet specific needs. Last but not least, we updated our billing system by launching several pricing plans just before the end of the year.
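
As a rough illustration of what prompt templating means in general, the sketch below uses Python's standard-library `string.Template`. It does not reflect Pulze's actual template syntax or API; the template text, the placeholder names, and the `render_prompt` helper are hypothetical.

```python
from string import Template

# Hypothetical reusable template; $placeholders are filled in per request.
SUPPORT_TEMPLATE = Template(
    "You are a $tone support assistant for $product.\n"
    "Answer the customer's question in at most $max_sentences sentences.\n\n"
    "Question: $question"
)


def render_prompt(template: Template, **values: object) -> str:
    """Fill in a template; raises KeyError if a placeholder has no value."""
    return template.substitute(**values)


if __name__ == "__main__":
    prompt = render_prompt(
        SUPPORT_TEMPLATE,
        tone="friendly",
        product="ExampleApp",
        max_sentences=3,
        question="How do I reset my password?",
    )
    print(prompt)
```

Using `substitute` rather than `safe_substitute` is a deliberate choice here: a missing value fails loudly instead of sending a half-filled prompt to a model.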

What's Next in GenAI and LLMs/MLMs

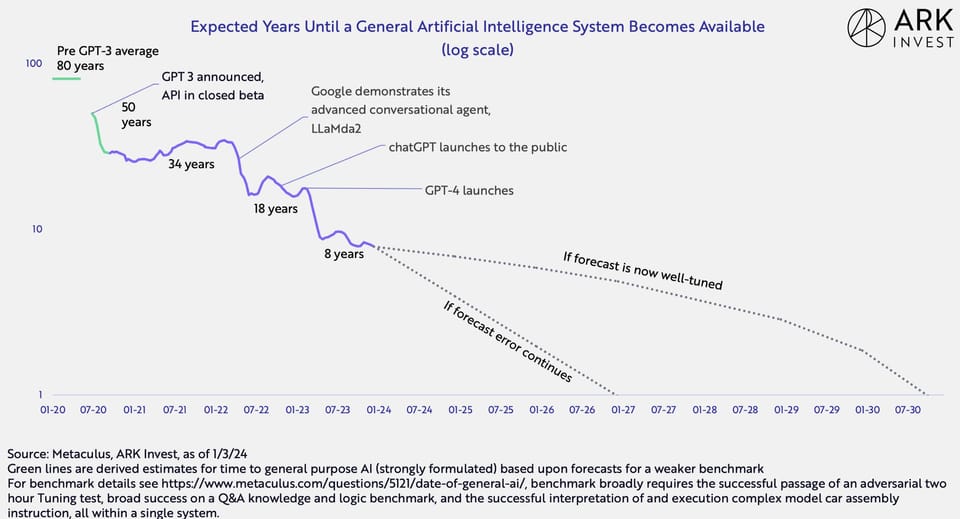

2024 will see significant advancements in large language models (LLMs) and wider adoption of generative AI technologies. We expect the hype around LLMs, chatbots, and GenAI to decrease while use cases and global adoption increase, given the large potential across industries pointed out by McKinsey. As model functionality continues to grow, properly fine-tuned LLMs will gain even more powerful capabilities, eventually leading to breakthrough applications in fields like healthcare.

However, as development moves into sensitive industries like healthcare, governments may step in and keep an eye on further developments and applications. There is currently no law that clearly determines who is at fault when an AI makes a false diagnosis, so regulation is to be expected (which is good). Regulators may also impose new restrictions on certain advanced LLM applications to address ethical and risk concerns, for example around the datasets used to pre-train common models. These concerns became more public toward the end of 2023: in late December, The New York Times filed a lawsuit against Microsoft and OpenAI, accusing them of copyright infringement and abusing the newspaper’s intellectual property (CNBC, 2023). Bias, ethics, and copyright will remain issues not only in 2024 but for many years to come.

We are bullish that LLM routing will become standard practice for many companies. As startups focus on applying LLMs through interfaces like chatbots and virtual agents, and as more enterprises recognize that routing delivers higher quality at lower cost, adoption will increase. This has already led to a growing number of startups working on routing tools and of companies building routing tools internally. As a leader in this area, we will continue to drive this trend forward by continuously updating our S.M.A.R.T. routing solutions.

In 2024, providers will focus on releasing LLMs with advanced features that extend their abilities, such as stronger, more human-like reasoning. Models will be able to understand longer texts thanks to larger context windows, a trend that will continue for a while. Faster and more affordable inference will make AI assistance accessible to more users. Together, these enhancements will provide versatile, high-quality AI systems tailored to individual needs.

Multi-modal functionality for LLMs, such as the ability to generate images, video, and audio from text inputs and vice versa, will see major improvements. Advancements in how LLMs ingest and generate content across different media formats will lead to more realistic multi-format outputs. Lower costs will also make multi-modal AI a viable option for more businesses and individuals, especially commercially, for marketing material and other promotions. On the B2C side, given the astonishingly high quality of anime output from multi-modal language models, it would not surprise us to see a high-quality, AI-generated anime series over the course of 2024.

Lastly, AI agents will start integrating seamlessly across websites and apps, making AI a natural part of digital experiences. Industries worldwide will also adopt generative AI as LLMs prove themselves reliable, standard tools on par with internet technologies. LLMs may even generate more online content than human users through increasingly sophisticated text, code, media, and more. 2024 promises to be another breakthrough year for generative AI integration into our digital lives and global economy.

What We Have Planned

As we look ahead to 2024, Pulze aims to maintain its position at the forefront of innovation in the generative AI space. Over the past year, we have made great strides in pushing the boundaries of what's possible with generative models and launched the first platform to automate smart routing. Our dedicated team of AI researchers and engineers will continue exploring new avenues for advancements across our product offerings.

Our model catalog will be enriched with cutting-edge additions in 2024. Researchers are exploring new frontiers in large language models, and Pulze aims to offer users access to the most advanced AI capabilities. This includes expanding our multi-modal offerings, adding voice models first, followed by video and image models.

Community building will also remain a priority. Pulze is committed to fostering global connections between AI enthusiasts, experts and industry partners. Look out for initiatives like our second annual AI Hack Party, which brings the generative AI community together from around the world.

As with any advanced technology, responsible and ethical development is paramount. Pulze will continue addressing considerations like bias, transparency and accountability. New features and programs aimed at ensuring AI safety will keep our users informed.

Technologies like retrieval augmented generation will become more enterprise-ready, and we will launch a feature that links a user's multiple applications. Flowz, a recently launched tool for combining workflows, lets users configure workflows for their applications easily using diagrams. In combination with “Prompts”, another recent feature, Flowz helps users connect and filter data across different apps, where each app may have its own set of prompts or selected models that are better suited to certain tasks. Our customers will also be able to quickly fine-tune best-in-class models to better suit their unique needs.

User growth in new industries will also be a key focus area. Through continuous improvements to provide a seamless experience, we're working to attract a wider audience to Pulze's platform and features. Our goal is to make generative AI more accessible and useful to organizations across all sectors, ultimately providing everyone using LLMs with maximum output quality at 100% uptime. To accelerate this, we will pursue further strategic partnerships and collaborations that expand the impact of our technology. Stay updated on our progress in this area through upcoming announcements on user base expansion.

In summary, 2024 looks set to be another groundbreaking year for Pulze and generative AI. Through ongoing innovation, partnerships and community focus, we aim to push the boundaries of what's possible and accelerate the benefits of this powerful technology.

Forecast in Summary

As Pulze reflects on the transformative year that was 2023, our focus turns to the future. We're excited about the possibilities that 2024 holds, with plans for feature launches, user-centric improvements, and a continued commitment to ethical AI. Join us on this journey as we shape the next chapter in Pulze's story and contribute to the evolution of generative AI.

In 2024, we expect advancements in large language models (LLMs) and generative AI to lead to widespread adoption across industries. Even as the hype decreases, issues such as regulation, bias, ethics, and copyright will persist. We plan to maintain our innovative position by expanding our model catalog and fostering global connections within the AI community. New functionality, such as retrieval augmented generation 2.0 and Flowz, aims to make workflows easier to configure. We are also focused on user growth in new industries and on strategic partnerships for wider impact. We look forward to continuing this journey. Stay tuned for detailed insights and updates throughout the year.